YWR: Late night at Imperial

I ended up going alone.

I had two tickets to the AI night at Imperial College and had expected that one of my two daughters would want to join me.

But nope. No interest.

It actually turned out better that they didn’t go.

I loved the AI event and learned a lot, but what I had to do to get the information, and the techniques I had to use, would have been too embarrassing for them.

The UK’s MIT

A bit of background. Imperial is the UK’s version of MIT. It was part of an early 20th-century initiative to make sure the UK stayed at the leading edge of technical innovation. The concept was that Imperial would drive research and innovation that would benefit British industry. To this day Imperial is science-focused, but also practical. Like if MIT and DeVry had a baby. If you want to read Latin, go to Oxford. If you want to build robots, go to Imperial.

And in terms of real estate they are blessed. It’s a nice campus in South Kensington, just off Hyde Park. Students there are hanging out in some of the most expensive real estate in the world.

Imperial Lates

‘Imperial Lates’ are open events held in the evening where the public are invited to visit the campus and interact with graduate students about their projects.

It was my first time attending one, so I didn’t know the format. It’s held in Imperial’s Main Entrance event space on Exhibition Road. It’s a fun setup with a DJ and a bar with drinks. All around the event space, Imperial graduate students stand in front of tables presenting their projects.

There were no talks or keynote speakers. You have to go up and talk to the students and find out what they are working on. Many of these students don't speak English well, and also don’t really know how to articulate what they are working on. Or more accurately, they know exactly what they are working on, but don’t know how much a person walking in off the street can handle. Do you say the whole thing, or pitch it at a basic level?

Most of them started out at the basic level, but if you pulled the string it got a lot more interesting. I’m good at that, so I enjoyed it. But it’s why I’m glad my kids weren’t there.

Table 1: Neutrinos and the Higgs Boson Particle

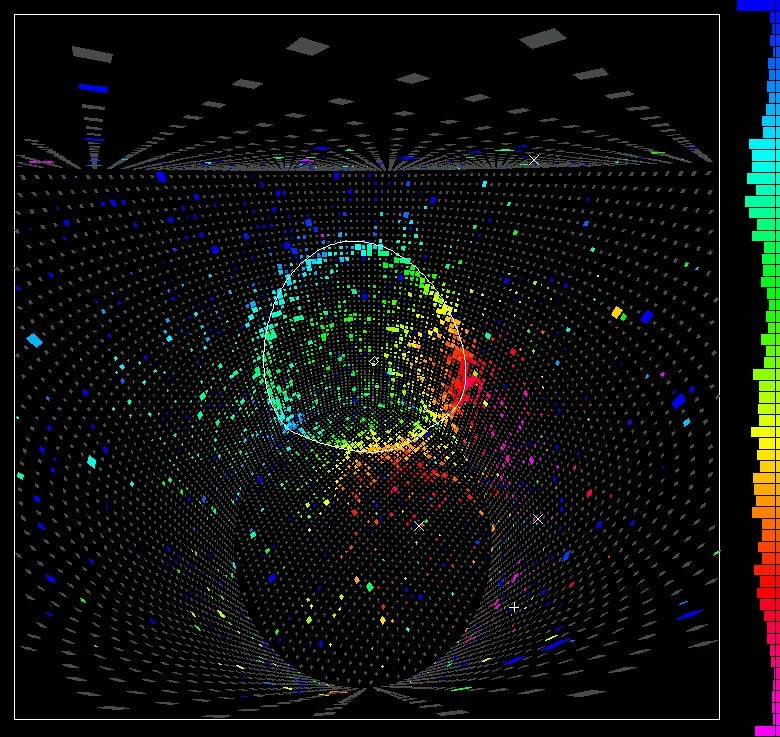

The event was relatively crowded, the tickets were free, and I didn’t really care what I learned, so my strategy was to talk to anyone who didn’t have a crowd around their table. The first table I went up to had a young man standing in front of an interesting display of neutrinos on a screen.

This PhD student’s table was showing how AI is used to analyse the cones of light produced by neutrino interactions at the Super-Kamiokande (Super-K) Observatory in Japan. Super-K is a huge tank of ultra-pure water built deep underground in a mine, where they run neutrino experiments.

The key physics concept is that nothing can travel faster than the speed of light in a vacuum, but light travels about 25% slower in water. Under water, it is possible for other particles to outrun light. In Super-K, when a neutrino hits the water it can knock loose a charged particle, such as an electron or a muon, that moves faster than light does in water. That particle gives off a cone of light, a kind of optical sonic boom, which shows up as a ring on the sensors lining the walls. It’s called the Cherenkov effect. Read about it yourself.
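For readers who want the numbers, here is a minimal sketch of the Cherenkov condition, assuming a refractive index for water of about 1.33 (light slows to c/n in a medium with index n). A charged particle only emits Cherenkov light when its speed, written as a fraction β of the vacuum speed of light, exceeds 1/n, and the cone's half-angle θ then satisfies cos θ = 1/(nβ). The function names are made up for illustration; this is not the Super-K software.

```python
import math
from typing import Optional

C = 299_792_458   # speed of light in a vacuum, m/s
N_WATER = 1.33    # approximate refractive index of water (assumed value)


def light_speed_in(n: float) -> float:
    """Speed of light in a medium with refractive index n, in m/s."""
    return C / n


def cherenkov_angle_deg(beta: float, n: float = N_WATER) -> Optional[float]:
    """Half-angle of the Cherenkov cone in degrees, from cos(theta) = 1 / (n * beta).

    beta is the particle's speed as a fraction of C. Returns None when the
    particle is not faster than light in the medium, so no light is emitted.
    """
    if n * beta <= 1:
        return None
    return math.degrees(math.acos(1 / (n * beta)))


print(f"Light in water: {light_speed_in(N_WATER) / C:.0%} of its vacuum speed")
print(cherenkov_angle_deg(0.999))  # near light speed: a cone of roughly 41 degrees
print(cherenkov_angle_deg(0.7))    # slower than light in water: None
```

The β > 1/n check is the whole trick: 1/n is about 0.75 in water, so only charged particles moving faster than roughly three-quarters of light speed light up the detector.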

The display was meant to show that AI was being used to stitch together all the information coming in from the sensors on the walls of the tank to create 3D visualisations of neutrino interactions. He said AI is very good at rendering this data in 3D space. He then explained that AI is increasingly useful in medical imaging.

We don't think about it, but MRI machines are really detecting radio signals given off by hydrogen atoms in our body when they sit in a strong magnetic field. At some point those radio signals have to be processed by a computer to get the end result we want, which is a picture of our brain on a computer display. AI helps with the stage before the image: signal processing.
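The classic, non-AI version of that reconstruction step can be sketched in a few lines. MRI scanners record their signal as frequency information (often called k-space), and a standard way to turn it into an image is an inverse Fourier transform. The sketch below is a simplified one-dimensional toy: the "tissue" values are made up, and the scanner measurement is simulated with a forward transform. Real scanners work in 2D or 3D with fast Fourier transforms, and the AI methods the student described are not shown here.

```python
import cmath


def dft(xs):
    """Discrete Fourier transform: turns a signal into frequency components."""
    n = len(xs)
    return [sum(x * cmath.exp(-2j * cmath.pi * k * t / n) for t, x in enumerate(xs))
            for k in range(n)]


def idft(freqs):
    """Inverse DFT: turns frequency components back into the signal."""
    n = len(freqs)
    return [sum(f * cmath.exp(2j * cmath.pi * k * t / n) for k, f in enumerate(freqs)) / n
            for t in range(n)]


# A hypothetical 1D "slice" of tissue brightness (made-up values).
tissue = [0.0, 0.2, 0.9, 1.0, 0.9, 0.2, 0.0, 0.0]

# Simulate what the scanner records: frequency-domain ("k-space") samples.
k_space = dft(tissue)

# Reconstruct the image from those samples, keeping the real part.
recovered = [round(v.real, 6) for v in idft(k_space)]
print(recovered)
```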

But as we spoke more, it turned out that using AI to take pictures of neutrinos was not really what this PhD student was working on. What he really wanted to know was: what happened to all the anti-matter?

Standard particle theory says matter and anti-matter should be created in equal amounts. Yet in real life we rarely see anti-matter.

Why is that?

Where is it?

Or, is something wrong with the original theory? Is the theory simplifying something that is actually more complex?

This PhD student thinks the answer likely lies with the Higgs boson, and that is what he is working on. This is the same particle from the popular book The God Particle.

I told him I thought this all sounded interesting, but super theoretical, and possibly kind of useless, like black holes. He said that wasn’t the case. Figuring out sub-atomic particle structures can have a big effect on new material development, which could lead to new materials for quantum computing. At that point I wished him luck with his PhD and his project to discover something new about the Higgs boson.

We’ll do the takeaways at the end.

Table 2: Generative AI and Superhero Cats

At the next table, two graduate students were showing off a machine learning model they had built that could generate a picture of any superhero you wanted from a text prompt.

‘A flying cat with a cape and muscles,’ I typed.

It was their own AI image generator. The output was similar to what you can get elsewhere, but it was interesting that two graduate students had built one themselves.

It was a bit off-topic, but I got into a discussion with one of the students about the importance of data lakes in AI. I wanted to know if it really is important to assemble the data in one place, or if the AI could go out and create the data lake itself.

It was kind of a loud event, and I’m not sure he completely heard what I was asking. What this student told me was this:

In a sense the AI engines are the easy part. Developers of AI like to show off image generation because it is easy. An image is just a grid of pixels with numeric colour values. He said it is more difficult to run AI models on data with a mix of different data types, especially categorical data.
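A minimal sketch of the student's point, using made-up data: an image is already a grid of numbers a model can consume directly, while a categorical column such as a country name has to be translated into numbers first. One-hot encoding, shown here, is one common way to do that; the student did not say which method his team uses, and the `one_hot` helper is hypothetical.

```python
# A tiny 2x2 RGB image: every pixel is already three numbers from 0 to 255.
image = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (255, 255, 255)],
]

# Flattening gives a model a ready-made list of 12 numbers.
pixels = [channel for row in image for pixel in row for channel in pixel]


def one_hot(values):
    """Hypothetical helper: one 0/1 column per distinct category."""
    categories = sorted(set(values))
    rows = [[1 if value == category else 0 for category in categories] for value in values]
    return categories, rows


# A country name has no natural numeric value, so it must be encoded.
countries = ["UK", "US", "UK", "Japan"]
categories, encoded = one_hot(countries)
print(categories)  # ['Japan', 'UK', 'US']
print(encoded)     # [[0, 1, 0], [0, 0, 1], [0, 1, 0], [1, 0, 0]]
```

Simply numbering the countries (Japan = 1, UK = 2, US = 3) would be shorter, but it would tell the model that the US is somehow "more" than Japan, which is why separate 0/1 columns are often preferred.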

I was asking about AI in finance and he was saying there will be a lot of value in assembling large data sets that have been cleaned and formatted to work together.

I understood this pain point. Maybe you’ve run into this yourself. It can be frustrating how much time is spent on formatting data sets.

For example, one data set might call the UK the ‘UK’, while another lists England, Scotland, Wales and Northern Ireland separately. You then have to write code to make it all the same. Or one time series uses day/month/year while another uses month/day/year. And with all of these things, you usually don’t find the problems until later, when the analysis doesn’t work.
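Here is a minimal sketch of the kind of clean-up code described above, using made-up figures. It assumes two hypothetical sources: one reports the UK nations separately with day/month/year dates, the other reports "UK" with month/day/year dates. Mapping the labels to one convention and parsing each source with an explicit date format makes the two agree; `COUNTRY_MAP` and `normalise` are illustrative names, not from any particular library.

```python
from datetime import datetime

# Hypothetical mapping from each source's labels to one convention.
COUNTRY_MAP = {
    "England": "UK",
    "Wales": "UK",
    "Scotland": "UK",
    "Northern Ireland": "UK",
    "UK": "UK",
}


def normalise(rows, date_format):
    """Map country labels, parse dates explicitly, and sum values per (country, date)."""
    totals = {}
    for country, day_text, value in rows:
        key = (
            COUNTRY_MAP.get(country, country),
            datetime.strptime(day_text, date_format).date(),
        )
        totals[key] = totals.get(key, 0) + value
    return totals


# Source A: nations listed separately, dates written day/month/year.
source_a = [
    ("England", "03/04/2024", 10),
    ("Wales", "03/04/2024", 2),
    ("Scotland", "03/04/2024", 3),
]
# Source B: a single "UK" row, dates written month/day/year.
source_b = [("UK", "04/03/2024", 15)]

a = normalise(source_a, "%d/%m/%Y")
b = normalise(source_b, "%m/%d/%Y")
print(a == b)  # True: both give UK = 15 on 3 April 2024
```

Parsing Source A with the wrong format would not raise an error: "03/04/2024" read as month/day/year is simply 4 March, which is exactly the kind of problem that only shows up later, when the analysis doesn’t work.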

This student was saying that getting all the data cleaned, in one place and ready for AI/machine learning has a lot of value, and it is underappreciated.

Table 3: Micro-Robotics

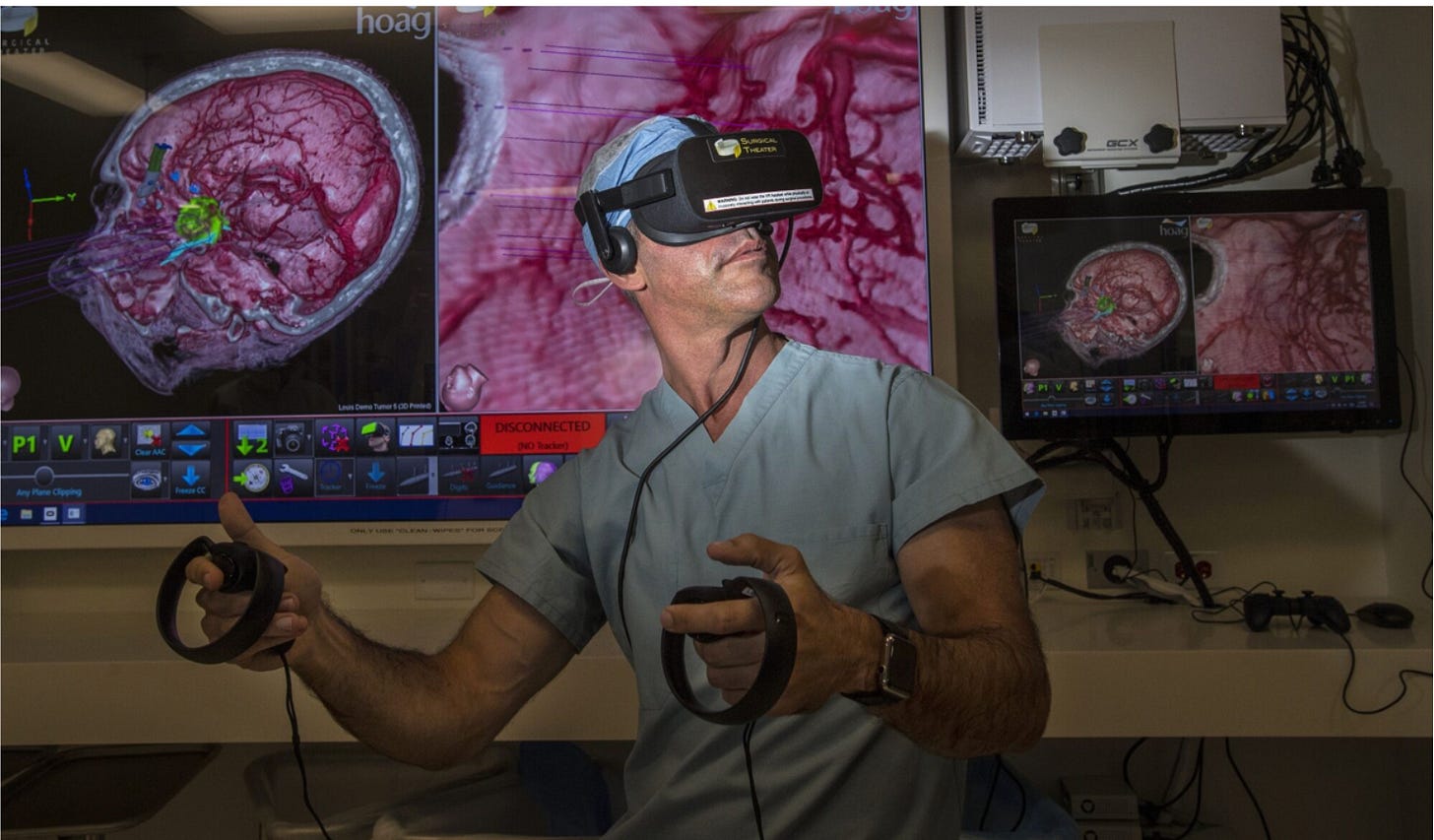

The final table I visited looked interesting because it had several robotic arms, some VR goggles and a mock human brain.

The display was showing how the next step in robotic surgery is to incorporate AI and VR. VR gives the surgeon better depth perception than a 2D screen.

But like the physics PhD student, they weren’t really working on VR goggles and surgery. That was just something interesting to show the public.

Really, they were working on micro-robots, controlled by magnets, which go into your circulatory system and repair tissue directly. I told him that was nuts. He said ‘You have to be able to envision the future first to be able to create it.’

Interestingly, as part of this micro-robot challenge he was having to learn about lithography. He needed a way to create a microscopic 3D maze so he could learn how to drive the robots through something like the human circulatory system. He and his partner could do it in 2D, but had never driven a robot with magnets through a microscopic 3D maze. To build one, you need lithography, the same kind of technology used to make semiconductors. And that was what he would be doing the next morning: learning about lithography.

I was really impressed.

‘You are building micro-robots that will drive around the body and repair human tissue.’

‘Yes.’

‘Are other people working on this?’

‘I’m not sure.’

‘Have you ever been approached by a VC?’

‘What is a VC?’

Wow.

The takeaways

I was struck by how many of these students were Chinese. Where are they going with these inventions after they graduate? Do they create UK or US start-ups, or do they go back to China?

It came up a lot how AI is being used in the medical industry. I don’t really follow the medical industry, but this made me wonder if I should be.

The students were using AI to solve problems across a wide array of disciplines. The existing challenges were still there, but AI seems to be speeding up the process of finding the answers.

The presentations were a peek under the covers at things which are still on the lab bench and 10 years out. I was getting a look into the future at things we don’t even know people are working on. And it made me think there is more cooking at these universities than we realise.

Erik, that’s great, but you aren’t answering the key question.

I know.

How do we make money on this?

Here’s what I think.